TPAMI 2018

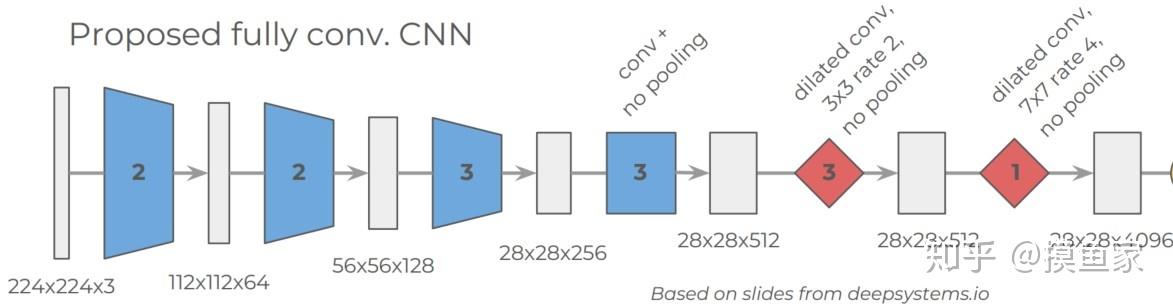

Deeplab v2 严格上算是Deeplab v1版本的一次不大的更新,在v1的空洞卷积和CRF基础上,重点关注了网络对于多尺度问题的适用性。多尺度问题一直是目标检测和语义分割任务的重要挑战之一,以往实现多尺度的惯常做法是对同一张图片进行不同尺寸的缩放后获取对应的卷积特征图,然后将不同尺寸的特征图分别上采样后再融合来获取多尺度信息,但这种做法最大的缺点就是计算开销太大。Deeplab v2借鉴了空间金字塔池化(Spatial Pyramaid Pooling, SPP)的思路,提出了基于空洞卷积的空间金字塔池化(Atrous Spatial Pyramaid Pooling, ASPP),这也是Deeplab v2最大的亮点。提出Deeplab v2的论文为DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs,是Deeplab系列网络中前期结构的重要代表。

ASPP来源于R-CNN(Regional CNN)目标检测领域中SPP结构,该方法表明任意尺度的图像区域可以通过对单一尺度提取的卷积特征进行重采样而准确有效地分类。ASPP在其基础上将普通卷积改为空洞卷积,通过使用多个不同扩张率且并行的空洞卷积进行特征提取,最后在对每个分支进行融合。ASPP结构如下图所示。

除了ASPP之外,Deeplab v2还将v1中VGG-16的主干网络换成了ResNet-101,算是对编码器的一次升级,使其具备更强的特征提取能力。Deeplab v2在PASCAL VOC和Cityscapes等语义分割数据集上均取得了当时的SOTA结果。关于ASPP模块的一个简单实现参考如下代码所示,先是分别定义了ASPP的卷积和池化方法,然后在其基础上定义了ASPP模块。

### 定义ASPP卷积方法

class ASPPConv(nn.Sequential):

def __init__(self, in_channels, out_channels, dilation):

modules = [

nn.Conv2d(in_channels, out_channels, 3, padding=dilation,

dilation=dilation, bias=False),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True)

]

super(ASPPConv, self).__init__(*modules)

### 定义ASPP池化方法

class ASPPPooling(nn.Sequential):

def __init__(self, in_channels, out_channels):

super(ASPPPooling, self).__init__(

nn.AdaptiveAvgPool2d(1),

nn.Conv2d(in_channels, out_channels, 1, bias=False),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True))

def forward(self, x):

size = x.shape[-2:]

x = super(ASPPPooling, self).forward(x)

return F.interpolate(x, size=size, mode='bilinear',

align_corners=False)

### 定义ASPP模块

class ASPP(nn.Module):

def __init__(self, in_channels, atrous_rates):

super(ASPP, self).__init__()

out_channels = 256

modules = []

modules.append(nn.Sequential(

nn.Conv2d(in_channels, out_channels, 1, bias=False),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True)))

rate1, rate2, rate3 = tuple(atrous_rates)

modules.append(ASPPConv(in_channels, out_channels, rate1))

modules.append(ASPPConv(in_channels, out_channels, rate2))

modules.append(ASPPConv(in_channels, out_channels, rate3))

modules.append(ASPPPooling(in_channels, out_channels))

self.convs = nn.ModuleList(modules)

self.project = nn.Sequential(

nn.Conv2d(5 * out_channels, out_channels, 1, bias=False),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True),

nn.Dropout(0.1),)

# ASPP前向计算流程

def forward(self, x):

res = []

for conv in self.convs:

res.append(conv(x))

res = torch.cat(res, dim=1)

return self.project(res)

下图是Deeplab v2在Cityscapes数据集上的分割效果: